Transprecision Computing: Redefining Performance and Efficiency.

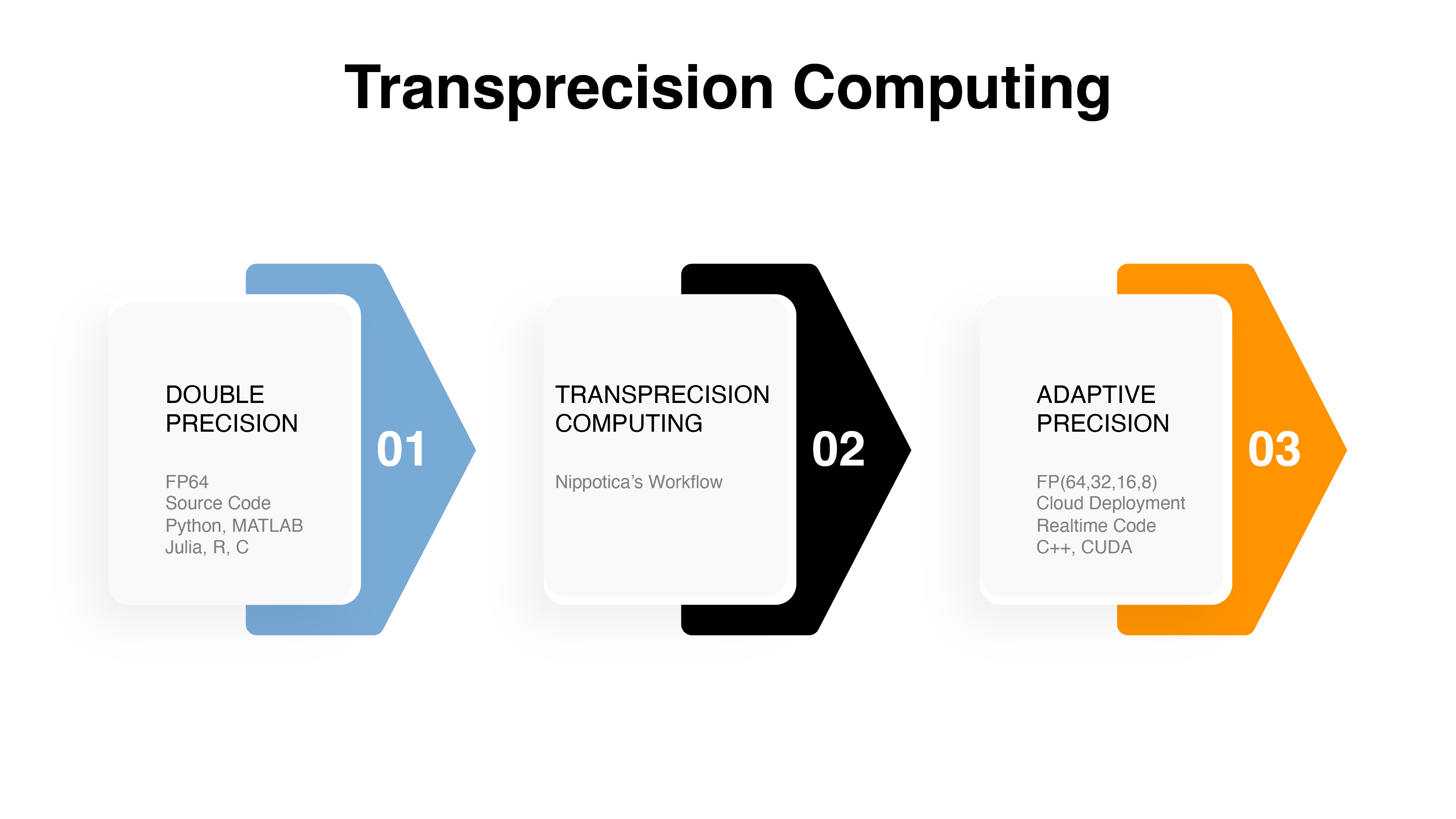

Modern processors, especially NVIDIA’s, have evolved into highly flexible engines capable of running computations in anything from 8-bit to 64-bit floating-point precision. Not just fixed-point integers, but fully adaptable precision floating-point arithmetic. That flexibility unlocks enormous potential across industries—scientific computing, quantitative finance, and computer vision, to name a few.

Yet most software is still written as if we’re stuck in the old days of fixed 64-bit precision.

That’s where Nippotica comes in. We bring the art of precision balancing—once a necessity in chip design—into the world of high-performance computing. By rewriting key parts of software to take advantage of adaptive precision, we make computation leaner, faster, and more efficient. The process is rigorous, but the results are worth it.

How Nippotica Makes Your Code Faster (Without Breaking Anything)

Most developers write code with one goal: make it work. At Nippotica, we take it a step further: make it work faster. And not just a little faster—we optimize the math at its core so your software runs leaner, sharper, and more efficiently. The result? Fewer wasted computing cycles, lower cloud costs, and the same accuracy where it matters. Let’s face it—no one wants their code to be the reason the cloud bill gives the CFO indigestion.

Here’s how we do it.

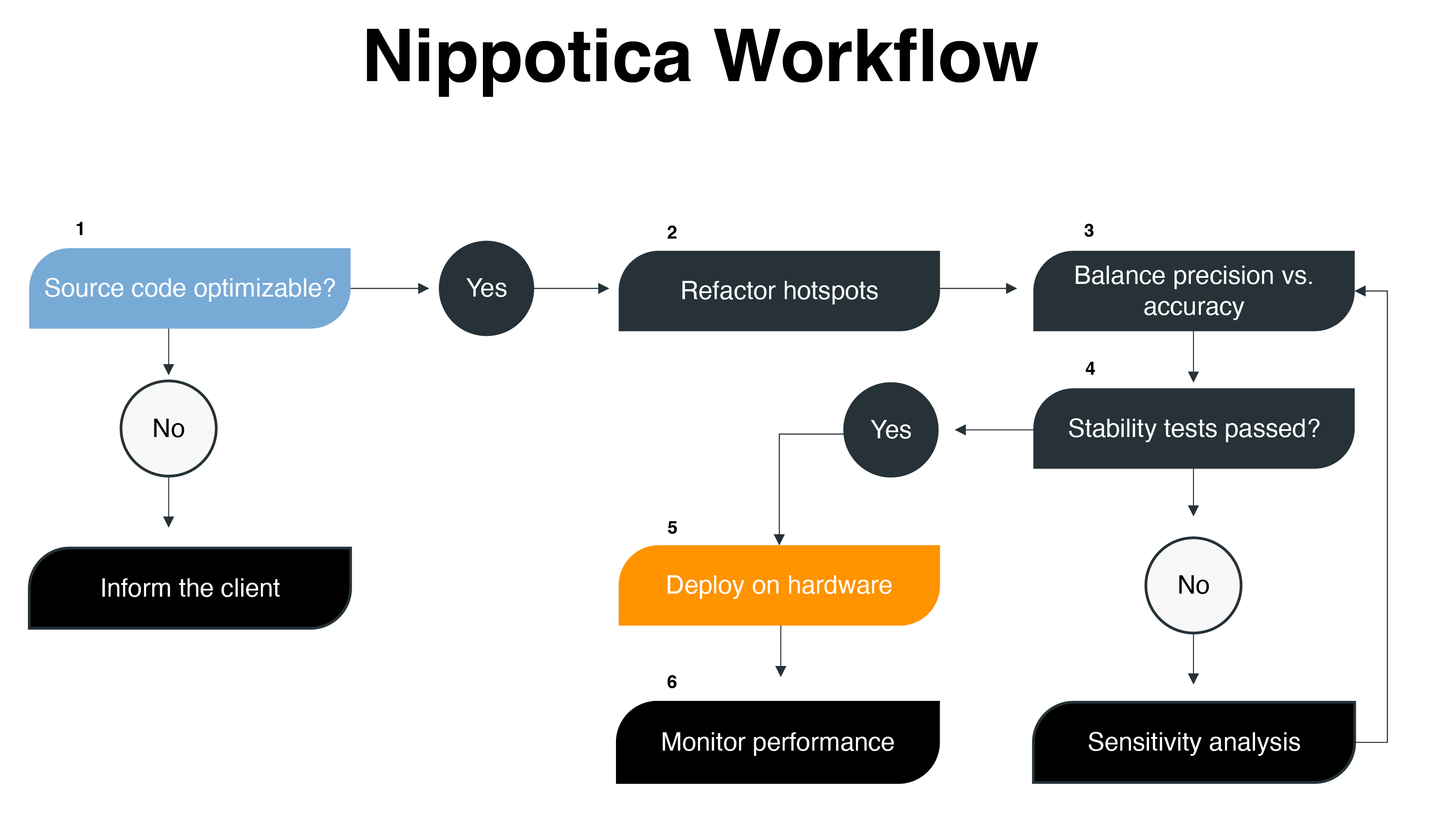

The Workflow

How Nippotica's Transprecision Computing Works.

Step 1: Can Your Code Be Optimized?

First, we take a hard look under the hood, at your source code—Python, Matlab, R, Julia, it doesn’t matter. What matters is the math inside. If your code is already as efficient as it gets, we’ll tell you. No vague promises. No unnecessary changes. But if there’s room for improvement, we move forward.

Step 2: Rewrite the Hotspots

Not all parts of your code are created equal. Some lines execute once and never again. Others are stuck in endless loops, running millions of times per second. Those hotspots are our focus. We rewrite them in C++, strip out inefficiencies, and set them up for adaptive precision.

Step 3: Adaptive Precision = Better Performance

Most developers default to double precision (FP64) because it’s the safe, easy choice. But easy isn’t always smart. Many algorithms don’t need that much precision. By carefully adjusting precision—using FP32, FP16, or even FP8 where possible—we free up processing power without sacrificing accuracy. In one case, switching to FP16 for a neural network inference task saved 30% in cloud costs without affecting model performance.

This isn’t about cutting corners. It’s about cutting waste.

Step 4: Stability Testing (a.k.a. No Surprises Later)

Before anything goes live, we stress-test the code to make sure it holds up. If it breaks? We tweak. If lowering precision creates instability? We adjust. The goal is to find the sweet spot where performance improves without affecting results.

Step 5: Deploy on High-Performance Hardware

Once we’ve optimized the math, it’s time to put it to work—whether that’s on AWS, Azure, Google Cloud, or an edge device like an NVIDIA Jetson Orin. The goal is always the same: maximize speed, minimize computational waste. Our team includes HPC engineers who’ve worked on everything from weather simulations to computer vision algorithms.

Step 6: Monitor, Measure, and Keep It Running Smoothly

Optimization doesn’t stop at deployment. We monitor real-world performance, track execution times, and make adjustments if needed. If all goes well (and it usually does), your software is now running faster, smarter, and more efficiently than ever.

Why Nippotica’s Workflow Matters

Computing power isn’t cheap. And in industries like finance, AI, and engineering, speed is everything. At Nippotica, we don’t just make software run faster—we make it smarter. Every floating-point operation is done at the right level of precision—no more, no less.

The result? The same accuracy, at a fraction of the computational cost.

Want to see what this looks like in action? Let’s talk and figure out how much performance you’ve been leaving on the table.